Using Existing PVCs for StatefulSet Data Storage

Reference: https://medium.com/@kevken1000/eks-attaching-an-existing-volume-to-a-statefulset-b6d1ea9d2491

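By default, a StatefulSet persists data through its volume claim templates (`volumeClaimTemplates`), which provision storage via a StorageClass. My personal development environment is not very stable, though. I tinker with it constantly, so the NFS storage sometimes has to change. To make migration easier, I prefer to define my own PVs and PVCs. This gives up some of the StatefulSet's built-in scaling convenience, but it makes moving things around much easier and suits my environment better.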

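First, note how a StatefulSet names the PVCs it creates from its volume claim templates: `{volumeClaimName}-{stsName}-{ordinal}`. Here `volumeClaimName` is the `name` defined under `volumeClaimTemplates`, `stsName` is the name of the StatefulSet, and the ordinal is the replica index (0, 1, 2, ...). Put together, they form the full PVC name. Since `{stsName}-{ordinal}` is also the Pod name, this is equivalent to `{volumeClaimName}-{podName}`.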

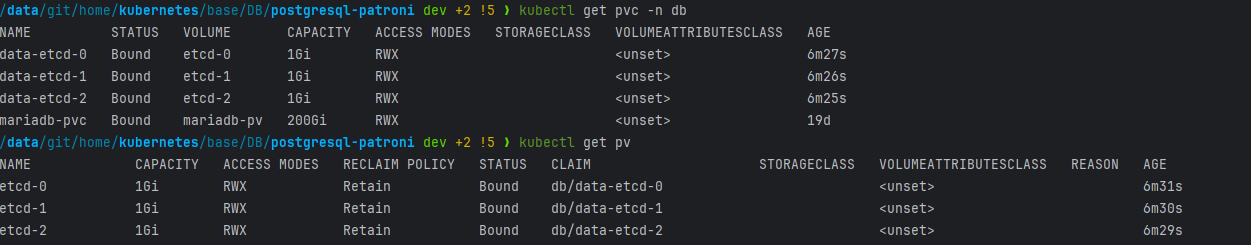
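The naming rule is simple enough to write as a tiny helper. This only illustrates the string format. The function name `sts_pvc_name` is hypothetical and not part of any Kubernetes client library.

```python
def sts_pvc_name(claim_template: str, sts_name: str, ordinal: int) -> str:
    """PVC name the StatefulSet controller uses for one replica's claim."""
    # {volumeClaimName}-{stsName}-{ordinal}, i.e. {volumeClaimName}-{podName}
    return f"{claim_template}-{sts_name}-{ordinal}"


# volumeClaimTemplates[].metadata.name = "data", StatefulSet "etcd", 3 replicas
names = [sts_pvc_name("data", "etcd", i) for i in range(3)]
print(names)  # ['data-etcd-0', 'data-etcd-1', 'data-etcd-2']
```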

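With that in mind, the rest is simple. Creating the PVCs by hand just means creating PVCs whose names match what the claim template would have produced. If a PVC with that name already exists, the StatefulSet controller uses it instead of creating a new one. The steps below use an etcd cluster as the example.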
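The three PV/PVC pairs below differ only in the ordinal and the NFS subdirectory, so they can also be generated instead of copied by hand. The sketch below is one way to do that. It assumes the same values as this post: NFS server `192.168.2.11`, namespace `db`, and directories `pv1`..`pv3` for ordinals 0..2. The script and its constants are hypothetical. It emits a `v1` `List` as JSON, which `kubectl apply -f` accepts just as it accepts YAML.

```python
import json

# Hypothetical values mirroring this post's example; adjust for your cluster.
NFS_SERVER = "192.168.2.11"
NFS_BASE = "/k8s_storage/db-etcd"
NAMESPACE = "db"
CLAIM_TEMPLATE = "data"  # volumeClaimTemplates[].metadata.name
STS_NAME = "etcd"
SIZE = "1Gi"             # use the same unit on PV and PVC


def pv_pvc_pair(ordinal: int) -> list[dict]:
    """Return a PV and a PVC pre-bound to it for one StatefulSet replica."""
    pv_name = f"{STS_NAME}-{ordinal}"
    pvc_name = f"{CLAIM_TEMPLATE}-{STS_NAME}-{ordinal}"
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        # PersistentVolumes are cluster-scoped, so no namespace here.
        "metadata": {"name": pv_name, "labels": {"app": pv_name}},
        "spec": {
            "capacity": {"storage": SIZE},
            "persistentVolumeReclaimPolicy": "Retain",
            "accessModes": ["ReadWriteMany"],
            "mountOptions": ["hard", "nfsvers=4.1"],
            # This post numbers the NFS dirs pv1, pv2, pv3 for ordinals 0, 1, 2.
            "nfs": {"server": NFS_SERVER, "path": f"{NFS_BASE}/pv{ordinal + 1}"},
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": pvc_name, "namespace": NAMESPACE},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "volumeName": pv_name,   # pre-bind to the PV above
            "storageClassName": "",  # empty class: no dynamic provisioning
            "resources": {"requests": {"storage": SIZE}},
        },
    }
    return [pv, pvc]


def manifests(replicas: int) -> dict:
    """Wrap the PV/PVC pairs for all replicas in a single v1 List."""
    items = [obj for i in range(replicas) for obj in pv_pvc_pair(i)]
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(manifests(3), indent=2))
```

For example, save it as `gen_volumes.py` (a hypothetical name) and run `python gen_volumes.py > etcd-volumes.json`, then `kubectl apply -f etcd-volumes.json`. To add replicas, call `manifests()` with a larger number and create the matching NFS directories.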

Define the PVs and PVCs

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  # PersistentVolumes are cluster-scoped, so no namespace is set
  name: etcd-0
  labels:
    app: etcd-0
spec:
  capacity:
    storage: 1Gi
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteMany
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    server: 192.168.2.11
    path: "/k8s_storage/db-etcd/pv1"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-etcd-0          # {volumeClaimName}-{stsName}-{ordinal}
  namespace: db
spec:
  accessModes: ["ReadWriteMany"]
  volumeName: etcd-0         # pre-bind this claim to the PV above
  storageClassName: ""       # empty class: no dynamic provisioning
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: etcd-1
  labels:
    app: etcd-1
spec:
  capacity:
    storage: 1Gi
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteMany
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    server: 192.168.2.11
    path: "/k8s_storage/db-etcd/pv2"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-etcd-1
  namespace: db
spec:
  accessModes: ["ReadWriteMany"]
  volumeName: etcd-1
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: etcd-2
  labels:
    app: etcd-2
spec:
  capacity:
    storage: 1Gi
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteMany
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    server: 192.168.2.11
    path: "/k8s_storage/db-etcd/pv3"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-etcd-2
  namespace: db
spec:
  accessModes: ["ReadWriteMany"]
  volumeName: etcd-2
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
```

Define the StatefulSet

```yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd
  namespace: db
  labels:
    app: etcd
spec:
  ports:
    - name: etcd
      protocol: TCP
      port: 2379
      targetPort: 2379
    - name: peer
      protocol: TCP
      port: 2380
      targetPort: 2380
  clusterIP: None          # headless: gives each Pod a stable DNS name
  selector:
    app: etcd
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: etcd
  namespace: db
spec:
  selector:
    matchLabels:
      app: etcd
  serviceName: "etcd"
  replicas: 3
  template:
    metadata:
      labels:
        app: etcd
    spec:
      initContainers:
        - name: init-etcd
          image: quay.io/coreos/etcd
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          command:
            - sh
            - -c
            - |
              set -ex
              # Unquoted EOF: $(hostname) and ${POD_NAMESPACE} are expanded here,
              # in the init container, which has the same hostname as the Pod.
              cat > /etcd-data/etcd.sh << EOF
              #! /bin/sh
              /usr/local/bin/etcd --name=$(hostname) \
                --advertise-client-urls=http://$(hostname).etcd.${POD_NAMESPACE}.svc.cluster.local:2379 \
                --initial-advertise-peer-urls=http://$(hostname).etcd.${POD_NAMESPACE}.svc.cluster.local:2380 \
                --listen-client-urls=http://0.0.0.0:2379 \
                --listen-peer-urls=http://0.0.0.0:2380 \
                --initial-cluster=etcd-0=http://etcd-0.etcd.${POD_NAMESPACE}.svc.cluster.local:2380,etcd-1=http://etcd-1.etcd.${POD_NAMESPACE}.svc.cluster.local:2380,etcd-2=http://etcd-2.etcd.${POD_NAMESPACE}.svc.cluster.local:2380 \
                --data-dir=/etcd-data
              EOF
              chmod +x /etcd-data/etcd.sh
          volumeMounts:
            - name: data
              mountPath: /etcd-data
      containers:
        - name: etcd
          image: quay.io/coreos/etcd
          imagePullPolicy: IfNotPresent
          command:
            - sh
            - /etcd-data/etcd.sh
          ports:
            - containerPort: 2379
              name: etcd
            - containerPort: 2380
              name: peer
          volumeMounts:
            - name: data
              mountPath: /etcd-data
  # PVCs data-etcd-0..2 already exist, so the controller reuses them and
  # does not create new claims from this template.
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteMany"]
        storageClassName: nfs
        resources:
          requests:
            storage: 1Gi
```

Once deployment finishes, go into the corresponding NFS directories and confirm that each now contains etcd data.
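One way to run this check from a shell on the NFS server is the small script below. It assumes the export is visible locally at `/k8s_storage/db-etcd`, the paths used by the PVs above. It also relies on etcd keeping its write-ahead log under `member/wal` inside `--data-dir`. The helper name is hypothetical.

```python
from pathlib import Path

# Assumption: the NFS export is reachable at this local path (e.g. on the NFS
# server itself); pv1..pv3 are the directories used by the PVs above.
BASE = Path("/k8s_storage/db-etcd")


def etcd_data_present(pv_dir: Path) -> bool:
    """Return True if pv_dir looks like an initialised etcd data directory."""
    # etcd stores its write-ahead log under <data-dir>/member/wal.
    return (pv_dir / "member" / "wal").is_dir()


if __name__ == "__main__":
    for n in (1, 2, 3):
        pv_dir = BASE / f"pv{n}"
        status = "etcd data found" if etcd_data_present(pv_dir) else "no etcd data"
        print(f"{pv_dir}: {status}")
```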

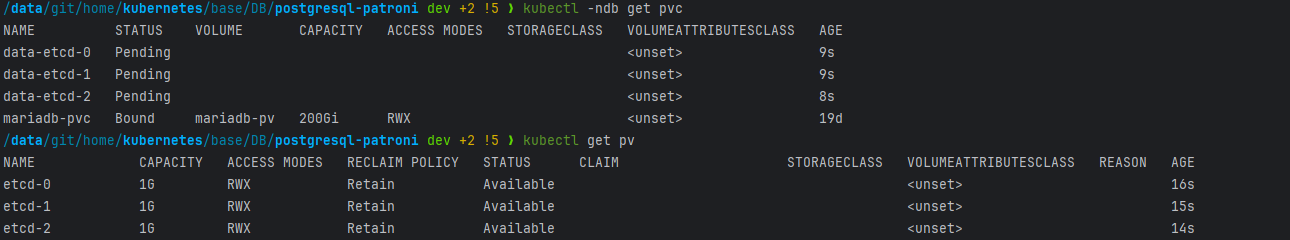
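The init script in the StatefulSet hard-codes three members in `--initial-cluster`. If you add replicas (and matching PV/PVC pairs), that list has to grow too. The sketch below derives it from the replica count. It assumes the headless Service `etcd`, the `cluster.local` cluster domain, and peer port 2380, as in the manifest. The function is hypothetical.

```python
def initial_cluster(sts_name: str, service: str, namespace: str, replicas: int,
                    peer_port: int = 2380, domain: str = "cluster.local") -> str:
    """Build etcd's --initial-cluster value from StatefulSet Pod DNS names."""
    members = []
    for i in range(replicas):
        pod = f"{sts_name}-{i}"
        # Stable per-Pod DNS name provided by the headless Service.
        url = f"http://{pod}.{service}.{namespace}.svc.{domain}:{peer_port}"
        members.append(f"{pod}={url}")
    return ",".join(members)


print(initial_cluster("etcd", "etcd", "db", 3))
```

Note that `--initial-cluster` only matters when a cluster is first bootstrapped. Adding a member to an already running etcd cluster also requires registering it (for example with `etcdctl member add`) and starting it with `--initial-cluster-state=existing`.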

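A small PVC-to-PV binding problem encountered during the experiment above

Symptom:

After creating the PV and PVC from a YAML file, the PVC never bound to its PV. The PV stayed `Available` and the PVC stayed `Pending`. The YAML file was: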

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: etcd-0
  labels:
    app: etcd-0
spec:
  capacity:
    storage: 1G              # decimal "G", while the PVC below requests "1Gi"
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteMany
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    server: 192.168.2.11
    path: "/k8s_storage/db-etcd/pv1"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-etcd-0
  namespace: db
spec:
  accessModes: ["ReadWriteMany"]
  volumeName: etcd-0
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
```

Troubleshooting (the PV output shown is for `etcd-2`, which also had a capacity of `1G`):

```
# kubectl describe pv etcd-2
Name:            etcd-2
Labels:          app=etcd-2
Annotations:     <none>
Finalizers:      [kubernetes.io/pv-protection]
StorageClass:
Status:          Available
Claim:
Reclaim Policy:  Retain
Access Modes:    RWX
VolumeMode:      Filesystem
Capacity:        1G
Node Affinity:   <none>
Message:
Source:
    Type:      NFS (an NFS mount that lasts the lifetime of a pod)
    Server:    192.168.2.11
    Path:      /k8s_storage/db-etcd/pv3
    ReadOnly:  false
Events:          <none>

# kubectl -n db describe pvc data-etcd-0
Name:          data-etcd-0
Namespace:     db
StorageClass:
Status:        Pending
Volume:        etcd-0
Labels:        <none>
Annotations:   <none>
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:      0
Access Modes:
VolumeMode:    Filesystem
Used By:       <none>
Events:
  Type     Reason          Age               From                         Message
  ----     ------          ----              ----                         -------
  Warning  VolumeMismatch  2s (x5 over 61s)  persistentvolume-controller  Cannot bind to requested volume "etcd-0": requested PV is too small
```

The PVC event reports `requested PV is too small`. Re-checking the YAML shows that the PV capacity uses the unit `G`, while the PVC request uses `Gi`. In Kubernetes quantities, `G` is a decimal (SI) suffix, so `1G` = 1,000,000,000 bytes. `Gi` is binary, so `1Gi` = 2^30 = 1,073,741,824 bytes. The PV therefore offers less than the PVC requests, and the controller refuses to bind them. The corrected configuration:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: etcd-0
  labels:
    app: etcd-0
spec:
  capacity:
    storage: 1Gi             # now the same binary unit as the PVC request
  persistentVolumeReclaimPolicy: Retain
  accessModes:
    - ReadWriteMany
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    server: 192.168.2.11
    path: "/k8s_storage/db-etcd/pv1"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-etcd-0
  namespace: db
spec:
  accessModes: ["ReadWriteMany"]
  volumeName: etcd-0
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
```

After redeploying, the PVC binds normally.
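The unit mismatch can be reproduced with a few lines of arithmetic. The parser below is a deliberately simplified sketch that covers only a handful of suffixes. It is not Kubernetes' own `resource.Quantity` implementation, but it applies the rule the error message reflects: a PV can only satisfy a claim whose request is no larger than the PV's capacity.

```python
from decimal import Decimal

# A subset of Kubernetes quantity suffixes (assumption: enough for this example).
# Decimal (SI) suffixes are powers of 1000; binary suffixes are powers of 1024.
SUFFIXES = {
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}


def to_bytes(quantity: str) -> int:
    """Convert a quantity string such as '1G' or '1Gi' to a byte count."""
    for suffix, multiplier in SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * multiplier)
    return int(Decimal(quantity))  # no suffix: a plain number of bytes


def pv_fits_claim(pv_capacity: str, pvc_request: str) -> bool:
    """A PV can satisfy a claim only if its capacity >= the requested size."""
    return to_bytes(pv_capacity) >= to_bytes(pvc_request)


print(to_bytes("1G"), to_bytes("1Gi"))  # 1000000000 1073741824
print(pv_fits_claim("1G", "1Gi"))        # False -> "requested PV is too small"
print(pv_fits_claim("1Gi", "1Gi"))       # True
```

The fix above uses the same binary unit on both sides. Requesting `1G` in the PVC would also bind, because all that matters is that the PV capacity is not smaller than the request.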